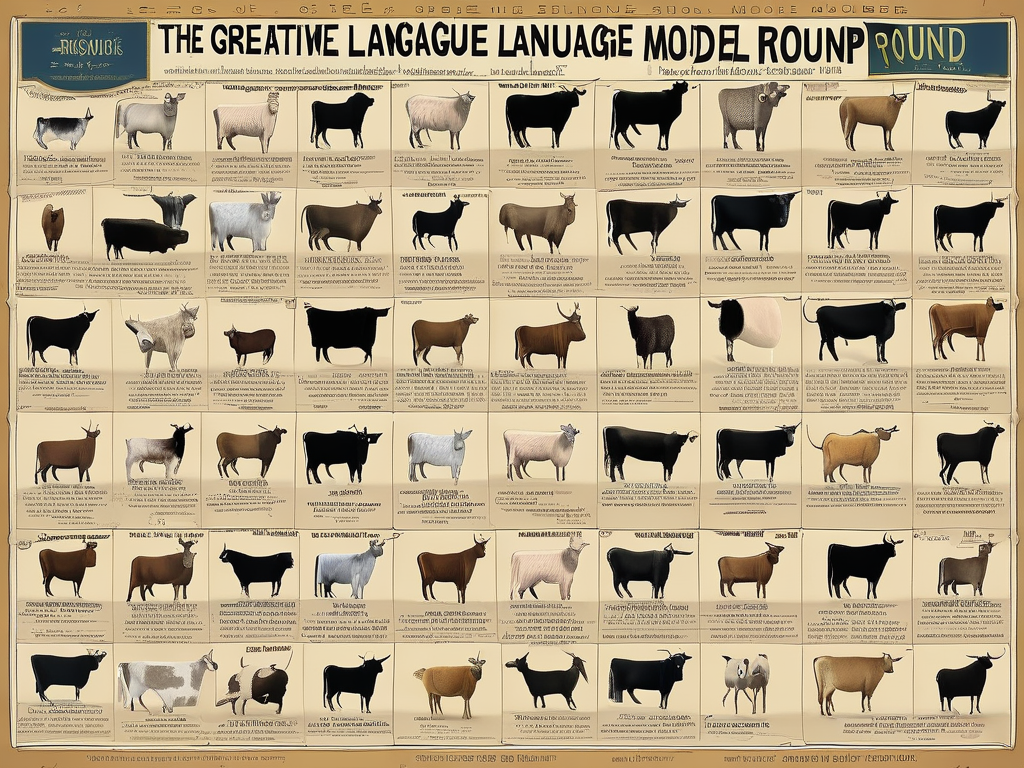

## The Great Language Model Roundup: Still One Steer Missing!

Honestly, folks, you can’t make this stuff up. We’ve got escaped cattle on a Texas highway – frankly, a delightful metaphor for the current state of generative AI – and now one steer is *still* out there. A single rogue bovine roaming free while we all fret about its well-being! It perfectly mirrors the persistent quirks of these sprawling language models: massive, impressive infrastructure… and yet something’s always a little off.

You see, this particular beast, let’s call it “Semantic Bess,” is reportedly difficult to capture. It apparently has an uncanny knack for dodging nets and blending into fields, much like a certain open-weight model I won’t name that keeps spitting out nonsensical prose despite its billions of parameters. These things are supposed to be *helpful*! But Semantic Bess seems determined to define “helpful” as “causing maximum bewildered head-scratching.”

The ranchers are deploying helicopters, tracking dogs… the full shebang. We’re talking serious resources devoted to finding one steer. And that’s precisely the level of dedication some folks pour into tweaking these language models: hours spent chasing a vanishingly small improvement in coherence or accuracy. You chase Semantic Bess’s tail expecting logic and efficiency, only to get dust and confused mooing in return.

The irony is almost too much. We build these colossal digital creatures – promising sentience (let’s not even start there) – and we’re left playing bovine hide-and-seek with a stray sentence or a hallucinated fact. Just find the steer, people! Find Semantic Bess and bring her home. Maybe then we can all get back to pretending this whole thing isn’t just spectacularly, hilariously complicated.