## Behold! A Language Model That Occasionally Remembers It’s a Bird

Seriously? Another one? We’ve been promised sentient AI, the key to unlocking universal understanding, and what do we get? A slightly improved text generator that occasionally hallucinates historical facts with the enthusiasm of a toddler playing dress-up. This… *thing*, this 3-to-12-billion-parameter behemoth, is apparently supposed to revolutionize everything. It’s meant to be the future! The pinnacle of computational linguistic achievement! And yet, it’s about as reliable as a politician’s promise.

The marketing materials practically scream “Revolution!” while the reality whispers, “I can write a passable poem about kittens, but please don’t ask me to explain quantum physics.” It’s like they trained it on Wikipedia and a collection of motivational posters. The result? A language model that sometimes strings words together with surprising coherence, then veers wildly off course into nonsense territory, leaving you wondering if it’s experiencing some sort of digital existential crisis.

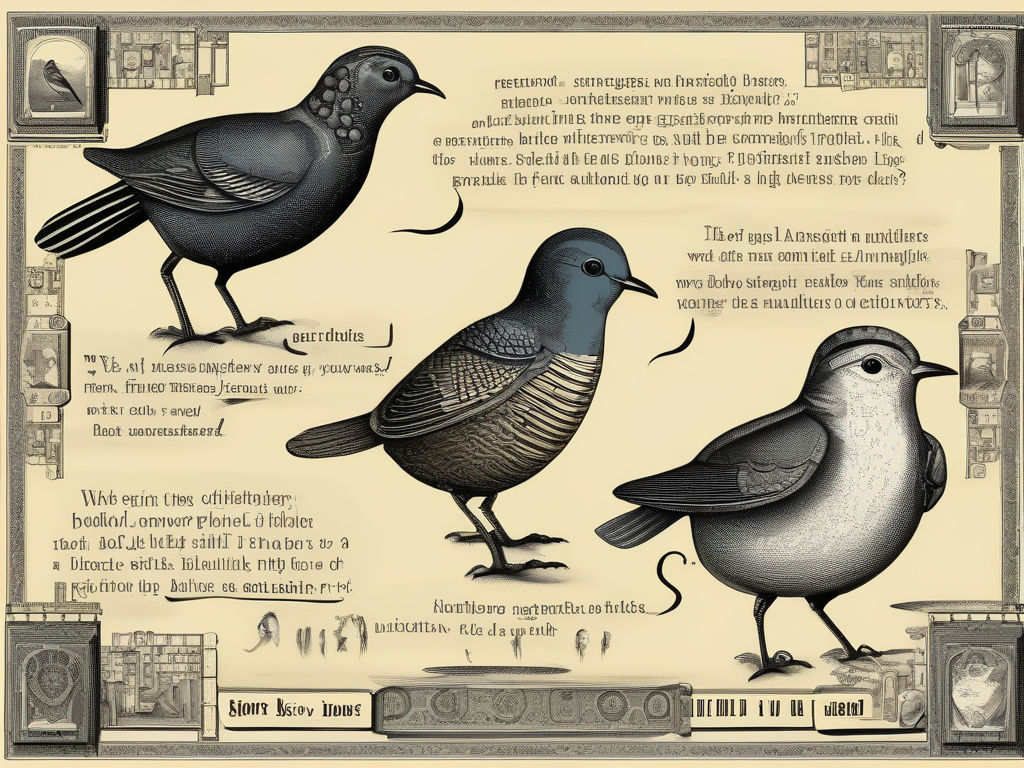

Honestly, the fanfare surrounding these releases is just exhausting. We’re supposed to be *astonished* when it generates a vaguely creative response? I’m more astonished that anyone thought this was worthy of such breathless praise! It’s like announcing you found a pigeon that can occasionally mimic a robin. Sure, it’s… something. But let’s not pretend it’s achieved avian transcendence.

Don’t get me wrong, there’s *potential* here, buried beneath layers of hype and optimistic projections. But until it consistently produces something more substantial than a well-formatted haiku about squirrels, I’ll be sticking to conversations with actual humans. At least they generally know what planet they’re on.