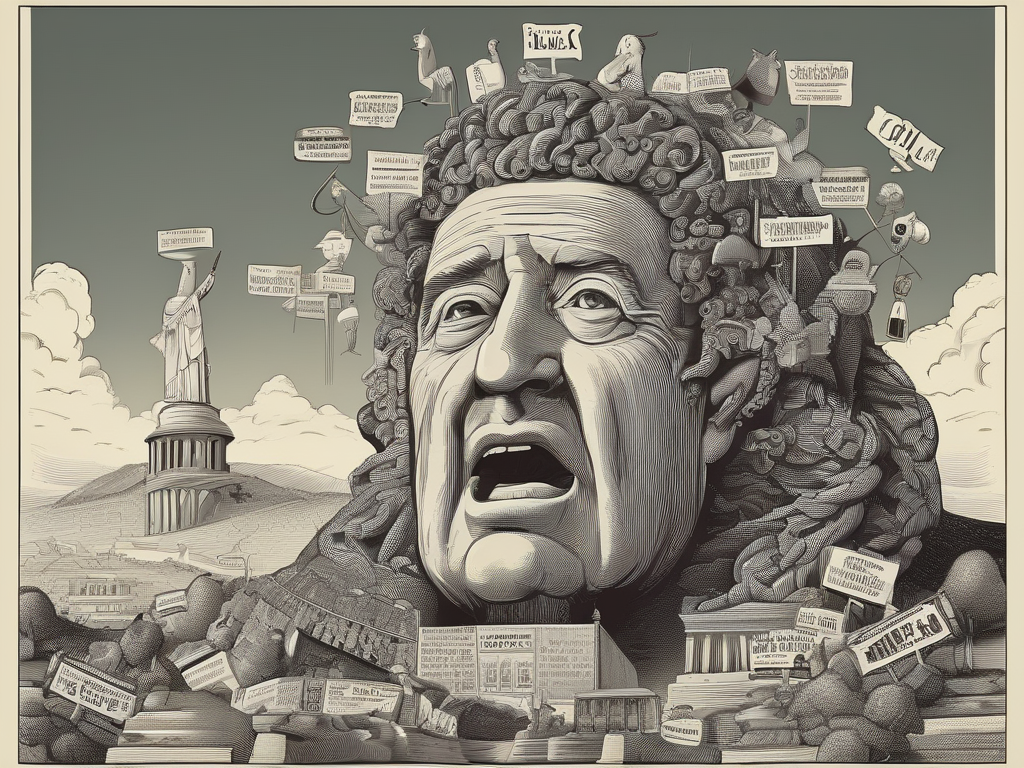

## Behold, The Linguistic Colossus: A 3.12 Billion Parameter Nightmare

Right, let’s talk about this thing. This… *creation*. Apparently, someone decided that existing language models weren’t sufficiently ludicrous and birthed a 3.12 billion parameter beast into the world. Because clearly, we needed another digital entity capable of stringing together coherent sentences while simultaneously consuming enough processing power to keep a small city lit. Wonderful. Just what we all wanted.

I mean, seriously? Three *point* one two *billion* parameters? It’s practically begging for existential dread. Imagine the training data! Mountains of text meticulously curated and fed into this behemoth, only so it could learn how to politely request you buy more cat food or explain the intricacies of quantum physics with a vaguely unsettling enthusiasm.

And what’s the point? We already have models that can write poems about squirrels wearing tiny hats. Do we *really* need one capable of generating a dissertation on the socio-economic impact of competitive ferret grooming? I suspect not. It’s like building a rocket to deliver a single, slightly stale biscuit across town.

The developers are probably patting themselves on the back, celebrating its “remarkable capabilities.” Remarkable in what way, precisely? Remarkably good at mimicking human conversation until you realize it’s just spitting out statistically probable word combinations? Remarkably efficient at creating an unsettling feeling of being watched by a digital void?

Honestly, I’m starting to think this is all some elaborate performance art piece. A commentary on our collective obsession with size and complexity. Or maybe someone simply lost a bet. Whatever the reason, I’m off to contemplate the inherent absurdity of existence while listening to whale songs and avoiding anything with more than three syllables. It feels safer.